The New Economy

We have massively overallocated our youth to roles like software engineering and medicine, which will shortly be replaced en masse by AI. I propose how the working population should reallocate.

In this essay, I propose that the labor force will reallocate away from jobs like software engineering and medicine, and instead toward construction in the short term and entertainment in the long term.

Homelessness and joblessness are rampant problems in San Francisco; India's youth population (~400 million people) is 20%+ unemployed; and AGI will leave millions of high earners (e.g. software engineers) without work, even in the United States.

These are all instances of the same problem. What I am interested in is a human equivalent of Bitcoin mining. Bitcoin mining allows us to take idle compute resources and contribute them toward something valuable. What can we do with idle people?

Refining the Problem Statement

AGI raises many interesting questions, but the problem above is separate from the following:

- What goals should we align humanity around in the long-term? It may be arrogant to suggest that such alignment is even possible or desirable. Instead, the problem here describes a medium-term issue facing the labor market. AGI's role may not be to guide humanity toward one grand objective, but rather to support a diversity of purposes and individual goals.

- If universal basic income is implemented, how should we capture and re-distribute it? Unrelated, but a useful lens for the profiteer.

- How should we keep people entertained, so they don't go insane? Entertainment/fulfillment is valuable, but not the only thing to optimize for.

- What should we use "extra" (~$0 marginal cost) inference power for? The problem above refers to the "extra" people, not compute.

Even without full-blown AGI, a solution for economically unproductive people is useful today, as automation continues to displace workers.

When AGI fully arrives, access to AGI might not be globally democratized, and the economic surplus from AI won't be re-distributed purely to the same individuals who held those high-paying jobs. People might either prefer to operate at their previous level of income/wealth, or they might find it fulfilling to contribute toward something greater than themselves, so this question remains interesting to me.

What constitutes a valid solution?

1. Is the work valuable? "Value" might not be measured in dollars generated, and it might sometimes be debatable whether something is valuable. It might be measured in fulfillment. This criterion eliminates meaningless redistributions of wealth or digging holes to fill them up again.

2. Is the job scalable? The solution must be able to employ hundreds of thousands, if not millions, of people.

3. Is it ethical? That said, unethical solutions are still worth highlighting, if only because they might lead to an ethical solution.

4. Is it AGI-resistant? The solution should still be useful even in a post-AGI world. This is difficult to predict and might not be possible. Solutions that aren't AGI-resistant are still useful in the interim period where masses are unemployed, but AGI cannot yet produce everything desired.

To satisfy the last criterion, a valid solution cannot rely on superior thinking ability to produce value. Timeframe also matters here, since certain tasks might take years longer to replace than others.

What are the possible solutions?

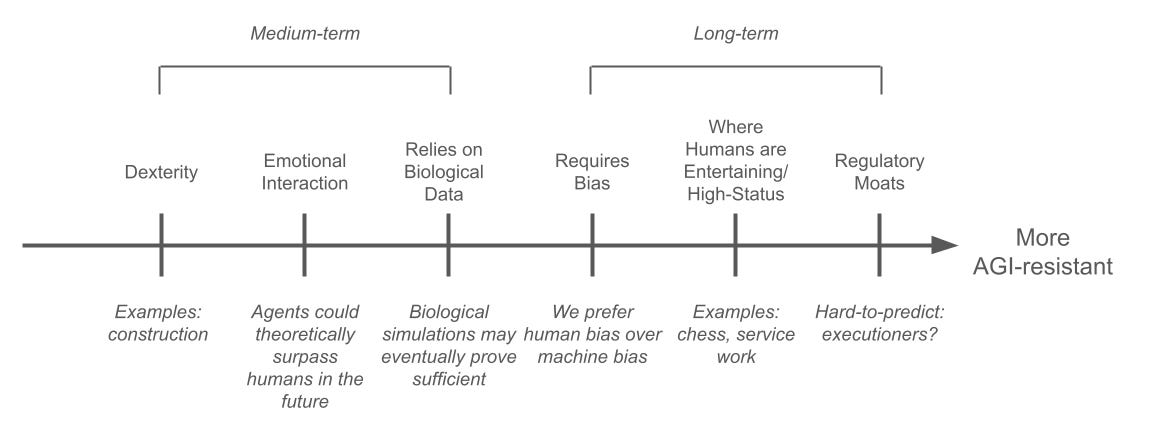

I've ranked the following attributes alongside my view of their AGI-resistance:

Below, I dive into each of these categories to illustrate what the reallocation of labor resources might look like:

Medium-Term Solutions

While fields like construction and elderly care are fulfilling, even these tasks can likely be replaced by agents/robotics within a reasonable timeframe. At the same time, construction in particular offers a compelling vision that can align and employ millions in the near term.

1. Construction: Build something grand that justifies the use of manual labor.

An example here would be if India were to commission the development of a Taj Mahal 2. The Indian government would guarantee a living wage to anyone who contributed, and such magnificent structures are worthwhile as works of art.

The United States administration should institute a "new" New Deal, where the government employs millions of Americans to develop bleeding-edge public infrastructure. This is the most compelling vision to me in the short-term.

This might involve directing resources toward the necessary construction for interstellar exploration and colonization: space ports, launch infrastructure, and habitats for human life on Mars.

This work would be valuable, scalable, and ethical, but not fully AGI-resistant.

2. Community work: Care for other humans, in capacities where humans are preferred.

I am interested in someone defining what it means to be healthy, and then designing the surrounding environment and checks to promote healthy child rearing. This comes from a concern that children who lack real human interaction in their upbringing will be emotionally and socially stunted.

This category might also include elderly care or running in-person, human-only communities.

3. Biological utility: Use your human body to produce biological data or materials.

Individuals like Bryan Johnson generate tremendous amounts of high-fidelity biological data that could theoretically be used to improve drug development. A more dystopian expression would be financially incentivizing experimental drug testing, since real human bodies will be superior to biological simulations or models for some period of time.

Another instance of this might be surrogates, if babies from surrogates are superior to lab-grown babies. We can reject unethical examples like organ donation or using the human metabolism for energy production, which is too metabolically inefficient anyway.

These roles are not particularly scalable, and they provoke ethical questions.

Long-Term Solutions

1. Bias mitigation: Some decisions inherently require bias, and we prefer these decisions be made by biased humans rather than biased models.

Such examples include the interpretation of the law/ethics, including judges, lawyers, or governance of systems related to AGI itself. This might also include oversight of some types of AGI output.

I don't view this as particularly scalable, and I'm concerned that human error rates might be too high to be useful.

2. Entertainment: Self-explanatory; entertain/serve other humans.

Unethical examples abound in this category, from prostitution to real-life Squid Games, gladiators, and so forth. But entertainment has so far proven resistant to AI alternatives. For example, while chess bots are definitively stronger than human players, we still prefer watching humans play, though widespread sentiment can of course change over time.

This includes the service industry, where it's higher status to have human labor over machines, and the Olympics, where we even limit drugs that could interfere with natural human performance. This work is valuable and scalable, and there are sufficient ethical examples.

How do we act on this?

I am concerned about a potential shock to the labor market where large segments of the population are rendered unemployed very quickly. I have serious doubts, for a variety of reasons, that governments would adapt quickly enough to issue UBI. In my view, it is wise for us to proactively institute the relevant legislation (e.g. a "new" New Deal) or large-scale private funding to incentivize a shift toward roles that are sustainable in the medium term.

I'm interested in hearing feedback and connecting with others who are interested in this problem space. You can reach me on Twitter at @neelsalami.